Designing innovative solutions and business models is a demanding undertaking: it involves facing complex problems, understanding unknown domains and cooperating with experts from various backgrounds. Nonetheless, organisations must face these challenges to stay relevant and not get left behind by the competition. This calls for methods and tools that support organisations in ideating, designing and developing such solutions and business models.

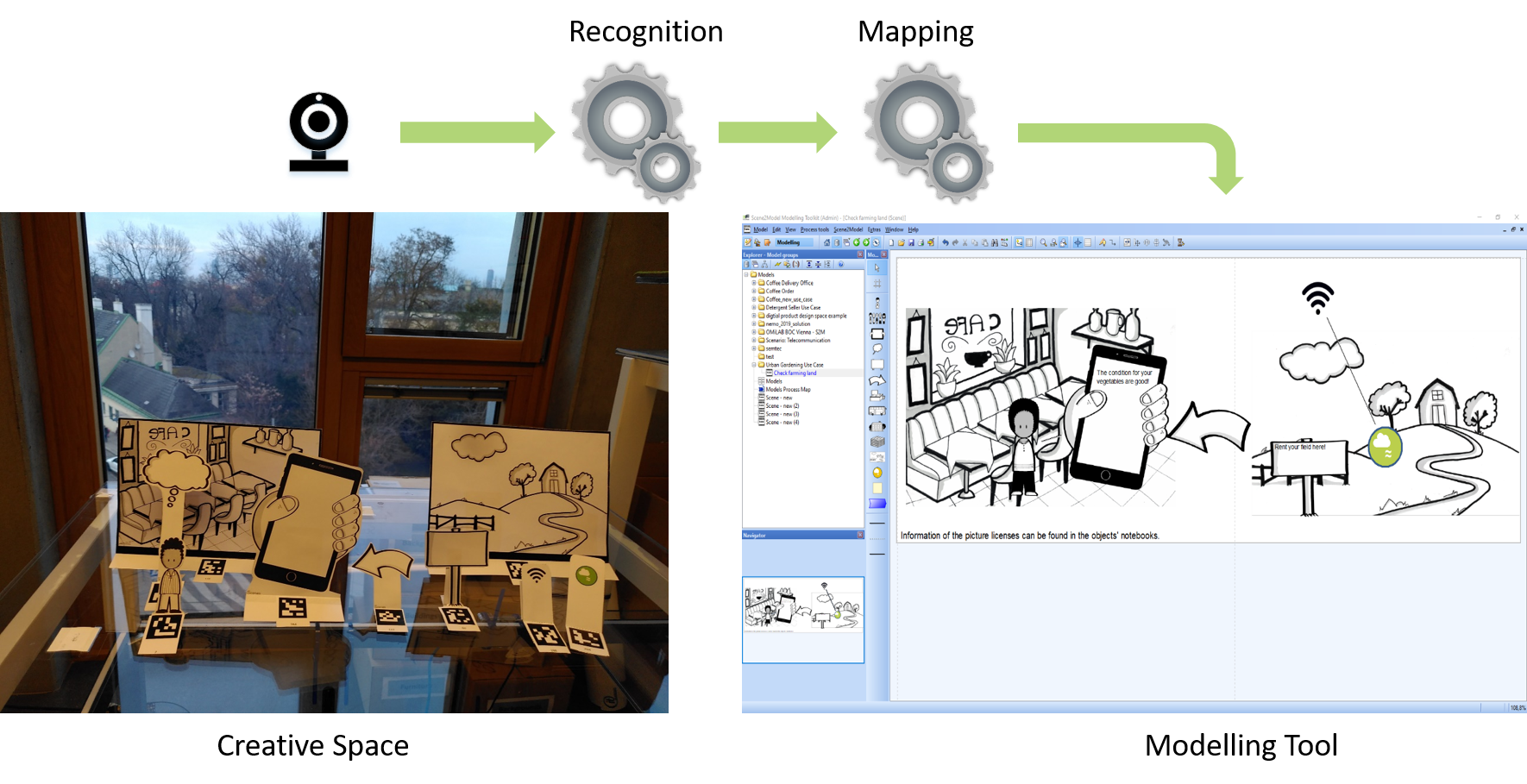

Modelled on physical Design Thinking workshops, Scene2Model offers an environment that supports such workshops by automatically creating Digital Twins from Design Thinking. This means that the haptic objects (e.g., paper figures, sticky notes) used during the workshop to externalise and visualise ideas are recognised, enhanced and transformed into digital conceptual models. These models make the designed idea understandable to humans and machines, improving the utilisation of the created knowledge.

Details on the tool and the download can be found at: scene2model.omilab.org

Scene2Model

Developed initially in the context of the DIGITRANS project by the OMiLAB@University of Vienna node, and since then further developed within OMiLAB in the EU-funded DigiFoF and FAIRWork projects, Scene2Model is a modelling environment combining physical and digital modelling. At its core is an ADOxx-based modelling tool that supports the creation and usage of various domain-specific Scene2Model icon libraries. Multiple usage settings allow the environment to be tailored so that it recognises haptic objects and automatically transforms them into a digital conceptual model. The physical modelling approach uses paper figures to represent the design idea and is based on SAP Scenes™.

The graphic contains figures from SAP Scenes™ and is based on a graphic from Miron, E.-T., Muck, C., Karagiannis, D., & Götzinger, D. (2018). Transforming Storyboards into Diagrammatic Models. In P. Chapman, G. Stapleton, A. Moktefi, S. Perez-Kriz, & F. Bellucci (Eds.), Diagrammatic Representation and Inference (pp. 770–773). Springer International Publishing.

Scene2Model Environment

At the heart of the environment sits the Scene2Model modelling tool, but additional components are needed to use it in a workshop environment. Aside from the physical space, technical components must be set up so that the physical and digital parts work together seamlessly. The generic, additionally needed components are:

- Physical Design Space: A physical environment where the workshop participants can work and place the paper figures. A table with a surface of about 80 cm × 80 cm is recommended, and workshop participants should be able to access it from three sides. For some settings, a camera must be positioned in this environment. The concrete requirements can be found in the description of the corresponding setting.

- Paper Figures: In the physical workshop, the participants use paper figures to visually design the novel idea. The paper figures represent the concepts used to describe the scenario, e.g., humans, cars, buildings, drones, robots and so on. The figures must be tailored to the concrete domain of the workshop so that the participants can easily interpret them and assign meaning to them.

- Image Provider: The first step in transforming the haptic design into a digital one is to take a picture of the positioned paper figures that can then be processed. Therefore, a camera must be positioned to take a picture of the paper figures, and a device must be connected that offers this picture to the recognition component.

- Recognition Component: This software component processes the provided picture and identifies the concepts used and their relative positions in the picture. For each recognised figure, it returns an identifier and its coordinates so that the digital models can be created.
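
The hand-over from the recognition component to the modelling tool can be illustrated with a small sketch. It is not the Scene2Model implementation or its API: the class names, the `ICON_LIBRARY` mapping, the identifiers such as `fig-001` and the `to_model_objects` function are all assumptions made for illustration. The sketch only shows the general idea of taking an identifier plus picture coordinates, looking up the concept in a domain-specific icon library and scaling the position to a model canvas.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RecognisedFigure:
    """One result from a (hypothetical) recognition component."""
    identifier: str  # id of the recognised paper figure (assumed format)
    x: float         # horizontal position in the picture, in pixels
    y: float         # vertical position in the picture, in pixels


@dataclass(frozen=True)
class ModelObject:
    """One element of the digital model (illustrative, not an ADOxx type)."""
    concept: str
    x_cm: float
    y_cm: float


# Hypothetical icon library: figure identifier -> concept of the domain.
ICON_LIBRARY = {"fig-001": "Person", "fig-002": "Car", "fig-003": "Drone"}


def to_model_objects(figures, image_size, canvas_size_cm, library=ICON_LIBRARY):
    """Map recognised figures to model objects on a canvas.

    Positions are scaled linearly from picture pixels to canvas centimetres.
    Figures whose identifier is not in the library are skipped.
    """
    img_w, img_h = image_size
    canvas_w, canvas_h = canvas_size_cm
    objects = []
    for fig in figures:
        concept = library.get(fig.identifier)
        if concept is None:
            continue  # unknown figure: no concept to instantiate
        objects.append(
            ModelObject(
                concept=concept,
                x_cm=fig.x / img_w * canvas_w,
                y_cm=fig.y / img_h * canvas_h,
            )
        )
    return objects
```

Calling `to_model_objects([RecognisedFigure("fig-001", 320, 240)], (640, 480), (40.0, 30.0))` would place a `Person` object in the middle of a 40 cm × 30 cm canvas. Keeping the icon library as a separate mapping mirrors the point made above that the figures are tailored to the concrete domain of the workshop.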