Developed initially in the context of the DIGITRANS project by the OMiLAB@University of Vienna node, the Scene2Model tool supports haptic modelling with paper figures and their automated transformation into digital conceptual models using image/QR-code recognition and semantic technologies. Since then, it has been further developed within OMiLAB in the EU-funded projects DigiFof and FAIRWork.

Scene2Model Environment

Scene2Model aims to support physical design thinking workshops by capturing the knowledge created in them as digital conceptual models. Its distinctive feature is that both physical and digital modelling are supported. In the physical workshop, haptic creative methods should be used to foster co-creation among the participants. The Scene2Model tool then automatically transforms the haptic results of the creative method into a digital conceptual model. To achieve this, the physical environment must be prepared, the Scene2Model modelling tool must be installed and the recognition environment must be set up. The recognition environment consists of a camera, which takes pictures of the physical environment, and software that processes these pictures and provides the results to the modelling tool.
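
The recognition environment can be pictured as a three-stage pipeline: capture a picture, recognize the figures in it, hand the results over to the modelling tool. The following Python sketch shows only that structure. The `Camera`, `Recognizer` and `ModelSink` interfaces, their method names and the `Detection` fields are hypothetical and do not reflect the actual Scene2Model interfaces; stub implementations stand in for real hardware and a real recognition service.

```python
from dataclasses import dataclass
from typing import List, Protocol


@dataclass
class Detection:
    """One figure found in a picture (hypothetical structure)."""
    label: str          # name of the recognized concept, e.g. "Person"
    confidence: float   # recognizer score in [0, 1]
    x: float            # centre of the figure in image pixels
    y: float


# Hypothetical interfaces for the three parts of the recognition environment.
class Camera(Protocol):
    def capture(self) -> bytes: ...


class Recognizer(Protocol):
    def detect(self, image: bytes) -> List[Detection]: ...


class ModelSink(Protocol):
    def submit(self, detections: List[Detection]) -> None: ...


def digitize_scene(camera: Camera, recognizer: Recognizer, sink: ModelSink) -> List[Detection]:
    """Take one picture of the physical scene, recognize the figures in it
    and pass the results on to the modelling tool."""
    image = camera.capture()
    detections = recognizer.detect(image)
    sink.submit(detections)
    return detections


# Stub implementations so the sketch runs without a camera or trained network.
class FakeCamera:
    def capture(self) -> bytes:
        return b"raw-image-bytes"


class FakeRecognizer:
    def detect(self, image: bytes) -> List[Detection]:
        return [Detection("Person", 0.93, 120.0, 80.0)]


class ListSink:
    """Collects submitted detections in place of the modelling tool."""
    def __init__(self) -> None:
        self.received: List[Detection] = []

    def submit(self, detections: List[Detection]) -> None:
        self.received.extend(detections)


sink = ListSink()
digitize_scene(FakeCamera(), FakeRecognizer(), sink)
print(sink.received[0].label)  # Person
```

Keeping the three stages behind separate interfaces means the camera or the recognition service could be swapped (for example, QR-code recognition versus a neural network) without touching the hand-over to the modelling tool.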

The physical modelling approach uses paper figures to represent the design idea and is based on SAP Scenes™.

This research project aims to train neural networks to recognize modelling objects, specifically for the case of design thinking within Scene2Model.

Use of Neural Networks with Scene2Model: A Case for Design Thinking

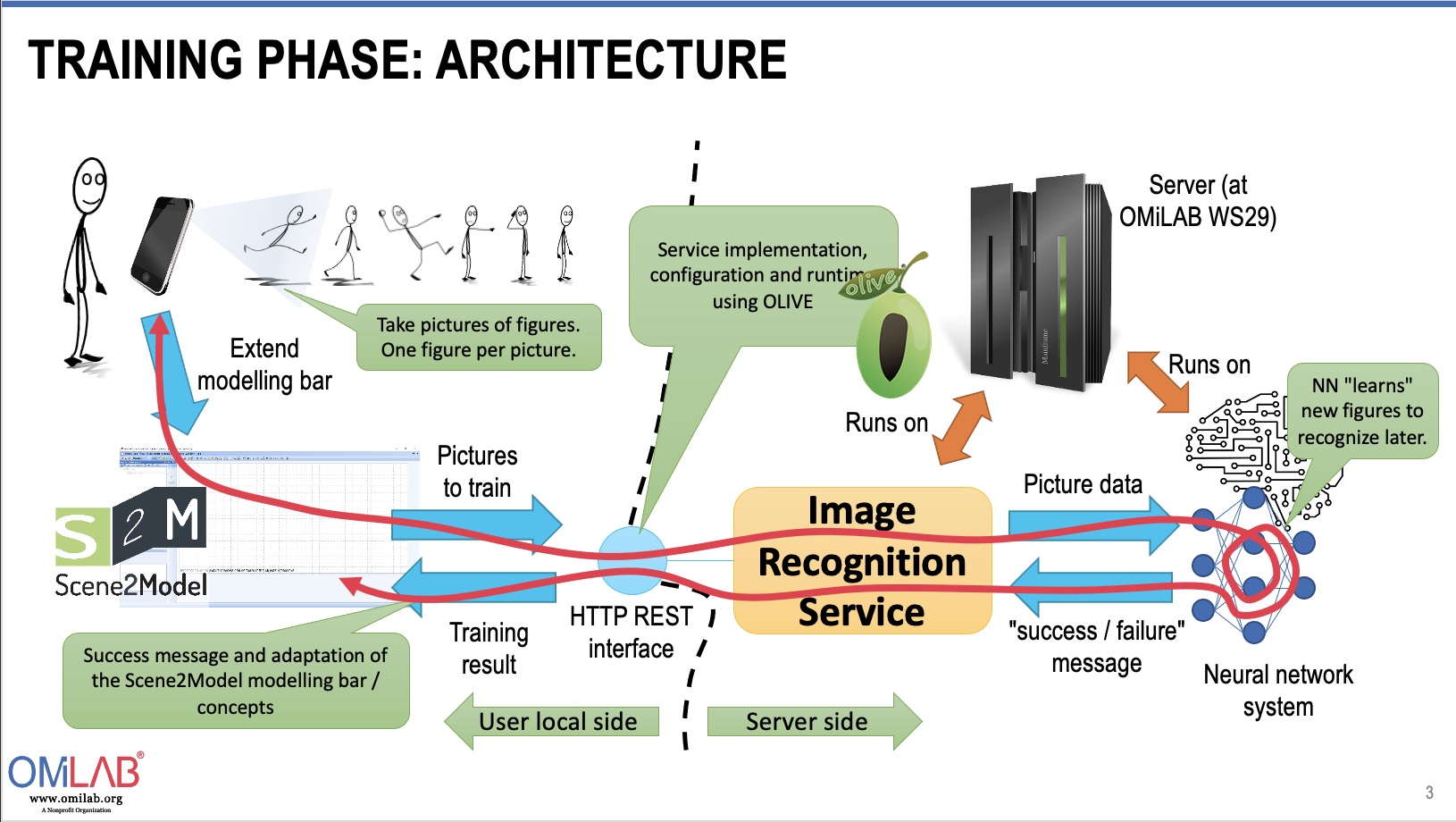

Training Phase:

- Add concepts to the Scene2Model modelling bar for the figures that may be relevant during the recognition phase.

- Train the image recognition service to recognize these figures in the scenes created during the recognition phase.
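
Both training steps depend on a consistent mapping between the concepts in the modelling bar and the output classes of the recognition network. A minimal sketch, assuming the training images are stored in one folder per concept (a common convention for image classifiers, not necessarily the layout Scene2Model uses); the function names, concept names and paths are illustrative.

```python
from pathlib import PurePosixPath
from typing import Dict, List, Tuple


def build_label_map(concepts: List[str]) -> Dict[str, int]:
    """Assign each modelling-bar concept a stable class index.
    Sorting makes the mapping independent of insertion order, so the
    training run and the later recognition step agree on the indices."""
    return {name: idx for idx, name in enumerate(sorted(set(concepts)))}


def build_manifest(image_paths: List[str], label_map: Dict[str, int]) -> List[Tuple[str, int]]:
    """Pair each training image with its class index.
    Assumes the (hypothetical) layout <root>/<ConceptName>/<image>.jpg;
    images whose folder is not a known concept are skipped, not mislabelled."""
    manifest = []
    for path in image_paths:
        concept = PurePosixPath(path).parent.name
        if concept in label_map:
            manifest.append((path, label_map[concept]))
    return manifest


concepts = ["Person", "Laptop", "Bicycle"]
label_map = build_label_map(concepts)
print(label_map)  # {'Bicycle': 0, 'Laptop': 1, 'Person': 2}

images = ["train/Person/p1.jpg", "train/Laptop/l1.jpg", "train/Tree/t1.jpg"]
print(build_manifest(images, label_map))
# [('train/Person/p1.jpg', 2), ('train/Laptop/l1.jpg', 1)]
```

Skipping `Tree` shows why the modelling bar comes first: a figure only becomes a training class once a matching concept exists.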

Recognition Phase:

- Create a physical scene with the available figures.

- Digitize the physical scene into the Scene2Model tool using the image recognition service.
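
The digitization step has to turn the recognizer's output into objects of the digital model. The sketch below assumes that each detection carries a label, a confidence score and an image position, and that the modelling canvas has a known size; it keeps the spatial layout of the physical scene by scaling positions and drops uncertain detections. The field names, canvas size and the 0.5 confidence cut-off are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Detection:
    """Hypothetical recognizer output for one figure."""
    label: str
    confidence: float
    x: float  # figure centre in image pixels
    y: float


@dataclass
class ModelObject:
    """Hypothetical object to create in the digital model."""
    concept: str  # modelling-bar concept to instantiate
    x: float      # position on the modelling canvas
    y: float


def to_model_objects(detections: List[Detection],
                     image_size: Tuple[int, int],
                     canvas_size: Tuple[int, int],
                     min_confidence: float = 0.5) -> List[ModelObject]:
    """Scale image positions to canvas positions so the layout of the
    physical scene is preserved; drop detections below min_confidence."""
    img_w, img_h = image_size
    can_w, can_h = canvas_size
    sx, sy = can_w / img_w, can_h / img_h
    return [
        ModelObject(d.label, d.x * sx, d.y * sy)
        for d in detections
        if d.confidence >= min_confidence
    ]


dets = [Detection("Person", 0.91, 320, 240), Detection("Laptop", 0.30, 100, 100)]
objs = to_model_objects(dets, image_size=(640, 480), canvas_size=(1280, 960))
print(objs)  # [ModelObject(concept='Person', x=640.0, y=480.0)]
```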

Further features:

- Dynamically set the attributes available in the notebook of the concepts in Scene2Model (training and recognition phases).

- Identify figures in the scene that have not yet been trained, or that the trained network could not identify reliably, and recommend extensions to the Scene2Model modelling bar (during recognition).
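
Spotting figures that were never trained is an open-set recognition problem. One simple heuristic, used here purely for illustration and not confirmed as the Scene2Model approach, is to reject any prediction whose highest class probability falls below a threshold and collect those figures as candidates for new modelling-bar concepts. The threshold of 0.6 and the function names are assumptions.

```python
from typing import Dict, List, Sequence


def split_known_unknown(probabilities: Sequence[Sequence[float]],
                        labels: Sequence[str],
                        threshold: float = 0.6) -> Dict[str, List[int]]:
    """Group detected figures by predicted concept.
    A figure counts as known only if its highest class probability reaches
    `threshold`; otherwise it is listed under "unknown"
    (maximum-softmax-probability heuristic)."""
    result: Dict[str, List[int]] = {"unknown": []}
    for idx, probs in enumerate(probabilities):
        best = max(range(len(probs)), key=probs.__getitem__)
        if probs[best] >= threshold:
            result.setdefault(labels[best], []).append(idx)
        else:
            result["unknown"].append(idx)
    return result


def recommend(result: Dict[str, List[int]]) -> List[str]:
    """Turn unknown figures into (hypothetical) suggestions for the modelling bar."""
    return [f"Figure {i}: no matching concept; consider adding one to the modelling bar"
            for i in result["unknown"]]


labels = ["Bicycle", "Laptop", "Person"]
probs = [[0.05, 0.05, 0.90],   # clearly a Person
         [0.40, 0.35, 0.25]]   # no class is confident enough
result = split_known_unknown(probs, labels)
print(result)  # {'unknown': [1], 'Person': [0]}
print(recommend(result))
```

Thresholding is easy to add on top of an existing classifier, but neural networks can be overconfident on unfamiliar inputs, so some untrained figures may still slip through as known concepts.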

If you are interested in contributing, please get in touch with us at info@omilab.org.